The 10 best metrics for DevOps

Why use metrics for DevOps?

Metrics are essential for any DevOps team that wants the development and operational sides of its software to run smoothly. Implementing and tracking them properly allows teams to identify bottlenecks, ensure high availability, maintain performance standards, and improve overall efficiency. Metrics also provide objective data that helps teams make informed decisions, pinpoint areas for improvement, and demonstrate the value of DevOps practices to stakeholders.

The top 10 metrics for DevOps

1. Deployment frequency

Deployment frequency measures how often new code is deployed to production. High deployment frequency indicates a team's ability to deliver new features and updates rapidly.

How deployment frequency is calculated

Count the number of deployments within a specific period (e.g., daily, weekly, monthly).
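As a rough illustration of the counting step, the sketch below groups deployment dates into ISO weeks. The function name `deployments_per_week` and the sample dates are hypothetical; in practice the dates would come from whatever deployment log or CI/CD system the team uses.

```python
from collections import Counter
from datetime import date


def deployments_per_week(deploy_dates):
    """Count deployments per (ISO year, ISO week) bucket."""
    counts = Counter()
    for d in deploy_dates:
        iso_year, iso_week, _ = d.isocalendar()
        counts[(iso_year, iso_week)] += 1
    return dict(counts)


# Hypothetical deployment dates, for illustration only.
deploys = [
    date(2024, 3, 4),
    date(2024, 3, 6),
    date(2024, 3, 7),
    date(2024, 3, 12),
]

weekly = deployments_per_week(deploys)
print(weekly)  # {(2024, 10): 3, (2024, 11): 1}
```

Bucketing by ISO week keeps the count stable across month boundaries; a daily or monthly bucket would work the same way with a different key.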

What tools can be used to get deployment frequency data

What average, good, and best in class look like for deployment frequency

- Average: 1-2 deployments per week

- Good: 3-4 deployments per week

- Best in class: Daily or multiple deployments per day

2. Lead time for changes

Lead time for changes measures how long it takes for committed code to be running successfully in production. Lower lead times indicate a more efficient development pipeline.

How lead time for changes is calculated

Measure the elapsed time from the commit to deployment in production.
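A minimal sketch of that measurement, assuming each change is available as a pair of commit and deployment timestamps (the sample data and the name `lead_times_hours` are hypothetical). Reporting the median rather than the mean is a choice made here so that one unusually slow change does not dominate the figure.

```python
from datetime import datetime
from statistics import median


def lead_times_hours(changes):
    """Return the lead time in hours for each (committed_at, deployed_at) pair."""
    return [
        (deployed - committed).total_seconds() / 3600
        for committed, deployed in changes
    ]


# Hypothetical changes: (commit time, production deployment time).
changes = [
    (datetime(2024, 3, 4, 9, 0), datetime(2024, 3, 4, 15, 0)),   # 6 hours
    (datetime(2024, 3, 5, 10, 0), datetime(2024, 3, 6, 10, 0)),  # 24 hours
    (datetime(2024, 3, 6, 8, 0), datetime(2024, 3, 6, 20, 0)),   # 12 hours
]

hours = lead_times_hours(changes)
print(median(hours))  # 12.0
```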

What tools can be used to get lead time data

What average, good, and best in class look like for lead time for changes

- Average: 1-2 weeks

- Good: A few days

- Best in class: Under 1 day

3. Change failure rate

Change failure rate measures the percentage of deployments causing a failure in production. Lower rates suggest reliable and stable deployments.

How change failure rate is calculated

(Number of failed deployments / Total number of deployments) x 100
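The formula above translates directly into code. This is only a sketch: the function name `change_failure_rate` and the sample counts are hypothetical, and what counts as a "failed" deployment (a rollback, a hotfix, an incident) is something each team has to define for itself.

```python
def change_failure_rate(failed_deployments, total_deployments):
    """Return the percentage of deployments that caused a failure in production."""
    if total_deployments == 0:
        raise ValueError("no deployments in the period")
    return failed_deployments * 100 / total_deployments


# Hypothetical month: 3 failed deployments out of 40.
print(change_failure_rate(3, 40))  # 7.5
```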

What tools can be used to get change failure rate data

What average, good, and best in class look like for change failure rate

- Average: 15-20%

- Good: 5-10%

- Best in class: Below 5%

4. Mean time to recovery (MTTR)

MTTR measures the average time it takes to restore service after an incident or failure. Lower MTTR indicates a team's ability to quickly address and resolve issues.

How mean time to recovery is calculated

Total time to resolve incidents / Number of incidents
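As a sketch of the calculation above, assume each incident is recorded as a start time and a time when service was restored (the sample incidents and the name `mean_time_to_recovery` are hypothetical):

```python
from datetime import datetime, timedelta


def mean_time_to_recovery(incidents):
    """Average the durations of (started_at, restored_at) incident pairs."""
    if not incidents:
        raise ValueError("no incidents in the period")
    total = sum(
        (restored - started for started, restored in incidents),
        timedelta(),
    )
    return total / len(incidents)


# Hypothetical incidents, for illustration only.
incidents = [
    (datetime(2024, 3, 4, 10, 0), datetime(2024, 3, 4, 10, 30)),  # 30 minutes
    (datetime(2024, 3, 9, 22, 0), datetime(2024, 3, 9, 23, 30)),  # 90 minutes
]

mttr = mean_time_to_recovery(incidents)
print(mttr)  # 1:00:00
```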

What tools can be used to get MTTR data

What average, good, and best in class look like for mean time to recovery

- Average: A few hours

- Good: Under 1 hour

- Best in class: Under 30 minutes

5. Availability/Uptime

Availability measures the proportion of time that a system is operational and accessible. Higher availability means better reliability and user satisfaction.

How availability is calculated

(Uptime / (Uptime + Downtime)) x 100
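To make the percentages more concrete, the sketch below applies the formula and also converts an availability target into the downtime it allows. Both function names are hypothetical, and the 30-day period is an assumption chosen for illustration.

```python
def availability_percent(uptime_minutes, downtime_minutes):
    """Apply (uptime / (uptime + downtime)) x 100."""
    total = uptime_minutes + downtime_minutes
    if total == 0:
        raise ValueError("empty measurement period")
    return uptime_minutes * 100 / total


def allowed_downtime_minutes(target_percent, period_days=30):
    """Minutes of downtime a target leaves room for over the period."""
    period_minutes = period_days * 24 * 60
    return period_minutes * (100 - target_percent) / 100


# Hypothetical month: 30 minutes of downtime in a 30-day period.
print(round(availability_percent(43_170, 30), 2))  # 99.93

# Downtime budgets over 30 days for two common targets.
print(round(allowed_downtime_minutes(99.9), 1))    # 43.2
print(round(allowed_downtime_minutes(99.99), 2))   # 4.32
```

Seen this way, moving from 99.9% to 99.99% shrinks the monthly downtime budget from roughly 43 minutes to roughly 4.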

What tools can be used to get availability data

What average, good, and best in class look like for availability

- Average: 99.0%

- Good: 99.9%

- Best in class: 99.99% or higher

6. Incident frequency

Incident frequency tracks how often incidents occur within a certain period, providing insights into system stability and areas that need improvement.

How incident frequency is calculated

Count the number of incidents within a specified period (e.g., weekly, monthly).

What tools can be used to get incident frequency data

What average, good, and best in class look like for incident frequency

- Average: Depends on the system's complexity

- Good: 1-5 incidents per month

- Best in class: Fewer than 1 incident per month

7. Error rates

Error rates measure the percentage of requests that result in errors, showing how frequently the system is failing to perform correctly.

How error rates are calculated

(Number of failed requests / Total number of requests) x 100
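A minimal sketch of the formula, assuming requests are HTTP calls and that a "failed" request is one answered with a 5xx status code. That definition is an assumption made here, not something the metric prescribes; some teams also count timeouts or selected 4xx responses. The name `error_rate` and the sample data are hypothetical.

```python
def error_rate(status_codes):
    """Return the percentage of requests that ended in a server error (5xx)."""
    if not status_codes:
        raise ValueError("no requests in the period")
    # Assumption: only 5xx responses count as failures.
    failed = sum(1 for code in status_codes if code >= 500)
    return failed * 100 / len(status_codes)


# Hypothetical sample: 200 requests, two of them server errors.
codes = [200] * 197 + [500, 503, 404]
print(error_rate(codes))  # 1.0
```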

What tools can be used to get error rate data

Tools like Sentry and New Relic can be used to track error rates.

What average, good, and best in class look like for error rates

- Average: 1-2%

- Good: Below 1%

- Best in class: Less than 0.1%

8. Infrastructure as code (IaC) deployment success rate

IaC deployment success rate measures the percentage of infrastructure as code deployments that complete successfully. Tracking it helps ensure that the infrastructure is being updated and maintained correctly.

How IaC deployment success rate is calculated

(Number of successful IaC deployments / Total IaC deployments) x 100

What tools can be used to get IaC deployment success rate data

What average, good, and best in class look like for IaC deployment success rate

- Average: 90-95%

- Good: 95-98%

- Best in class: Above 98%

9. Test coverage

Test coverage indicates the extent to which your codebase is exercised by automated tests. Higher coverage helps protect code quality and stability, although it does not guarantee them.

How test coverage is calculated

(Number of lines of code executed by automated tests / Total lines of code) x 100
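Coverage tools normally report this number directly, but the arithmetic can be sketched as follows. The per-file counts and the name `line_coverage` are hypothetical. Note that the lines are summed across files before dividing, so large files weigh more than small ones; averaging the per-file percentages would give a different result.

```python
def line_coverage(per_file):
    """Return overall line coverage from {file: (covered_lines, total_lines)}."""
    covered = sum(c for c, _ in per_file.values())
    total = sum(t for _, t in per_file.values())
    if total == 0:
        raise ValueError("no lines of code to measure")
    return covered * 100 / total


# Hypothetical per-file results from a coverage report.
report = {
    "api.py": (80, 100),  # 80% covered
    "db.py": (30, 50),    # 60% covered
}

print(round(line_coverage(report), 1))  # 73.3
```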

What tools can be used to get test coverage data

SonarQube can be used to monitor test coverage.

What average, good, and best in class look like for test coverage

- Average: 50-60%

- Good: 70-80%

- Best in class: Above 90%

10. Customer ticket volume

Customer ticket volume measures the number of tickets raised by customers, providing insights into user-reported issues and service quality.

How customer ticket volume is calculated

Count the number of customer tickets within a specific period (e.g., weekly, monthly).

What tools can be used to get customer ticket volume data

What average, good, and best in class look like for customer ticket volume

- Average: Depends on the user base

- Good: Fewer than 20 tickets per month

- Best in class: Fewer than 10 tickets per month

How to track metrics for DevOps

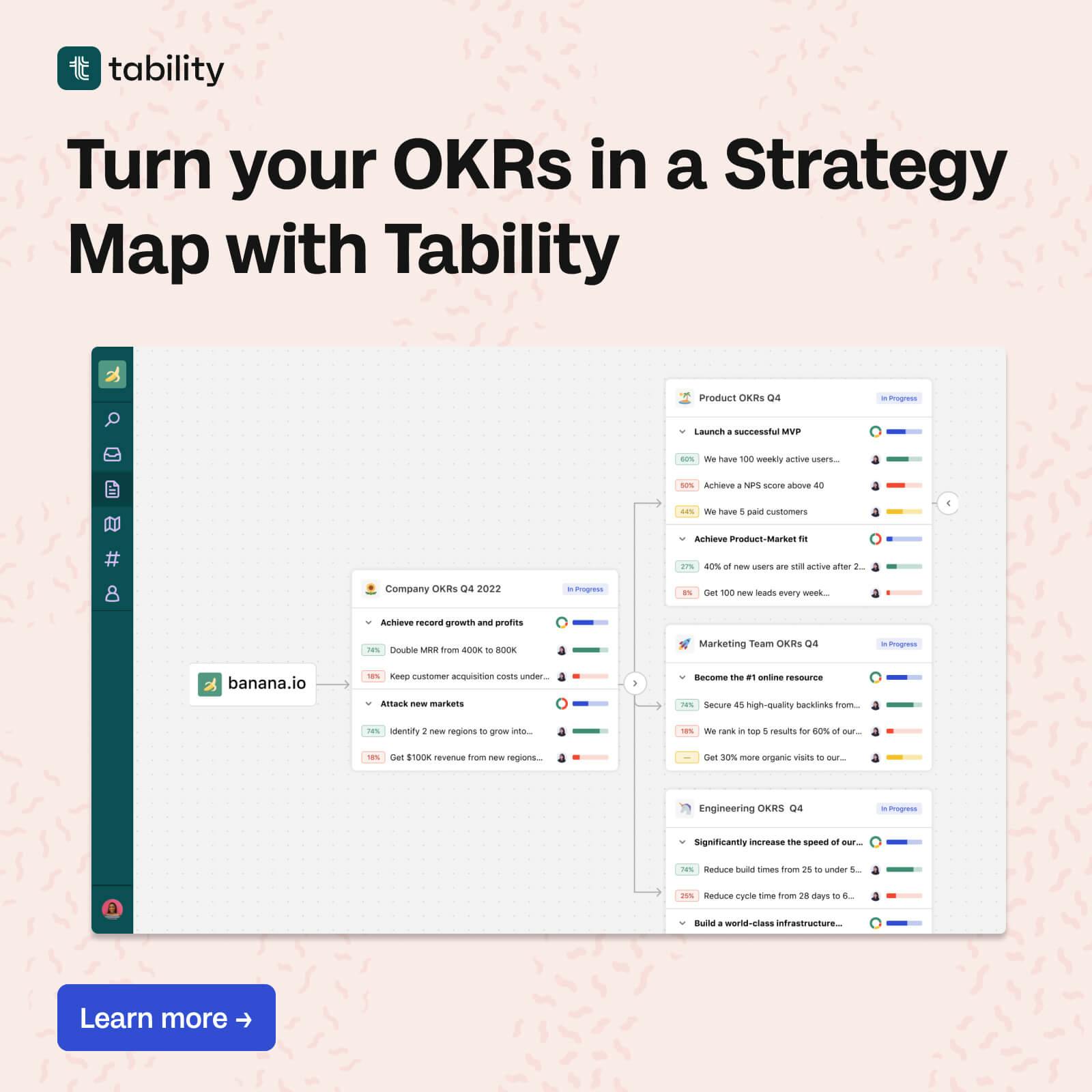

Tracking DevOps metrics effectively requires the right tools and practices. A goal-tracking tool like Tability can save time and help teams stay focused on the metrics that matter. DevOps teams should review and adjust their metrics regularly so that they keep pace with change and support continuous improvement. Automating the collection and analysis of these metrics provides more timely and accurate insights, which in turn support decisions that improve performance and reliability.

FAQ

Q: Why are deployment frequency and lead time important?

A: High deployment frequency and low lead time for changes indicate that a team can quickly deliver features and updates, which helps in maintaining a competitive edge and meeting user demands promptly.

Q: What is the significance of change failure rate and MTTR?

A: A low change failure rate means your deployments rarely introduce errors, and a low MTTR means issues are resolved quickly, both of which are critical for maintaining system reliability and customer satisfaction.

Q: How can high availability benefit an organisation?

A: High availability means your system is more reliable and accessible, leading to increased user trust and satisfaction as well as potentially higher revenue.

Q: What tools are best for tracking error rates and test coverage?

A: Tools like Sentry and New Relic are great for tracking error rates, while SonarQube is excellent for monitoring test coverage.

Q: How does customer ticket volume relate to system performance?

A: A high number of customer tickets often indicates underlying issues with system performance, usability, or reliability. A falling customer ticket volume is a key indicator that service quality is improving.