The 10 best metrics for software quality

Why use metrics for software quality?

In software development, high quality is essential for user satisfaction, lower maintenance costs, and long-term success. Software quality metrics help teams get there: by measuring different aspects of quality, they can pinpoint areas for improvement, benchmark their performance, and make informed decisions to optimise their development processes.

The top 10 metrics for software quality

1. Defect density

Defect density measures the number of defects or bugs relative to the size of the software. It is a crucial metric for understanding the overall quality of the software and ensuring it meets quality standards before release.

- How defect density is calculated: Divide the number of defects by the size of the software, usually measured in thousands of lines of code (KLOC) or in function points.

- What tools can be used to get defect density data: Tools like Jira (https://www.atlassian.com/software/jira) and Bugzilla (https://www.bugzilla.org) are useful for tracking defects.

- What average, good, and best in class look like for defect density:

  - Average: 5-10 defects per KLOC (thousand lines of code)

  - Good: 1-5 defects per KLOC

  - Best in class: <1 defect per KLOC
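
As a minimal sketch of the formula above, the Python function below expresses defect density per KLOC, the unit used in the benchmarks. The function name and example figures are hypothetical; it assumes you have already pulled a defect count from your tracker and a line count from a tool of your choice.

```python
def defect_density_per_kloc(defect_count: int, lines_of_code: int) -> float:
    """Return defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    kloc = lines_of_code / 1000  # convert raw line count to thousands of lines
    return defect_count / kloc


# Hypothetical example: 42 defects in a 12,000-line codebase.
print(defect_density_per_kloc(42, 12_000))  # 3.5
```

At 3.5 defects per KLOC, this hypothetical codebase would sit in the "Good" band above.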

2. Code coverage

Code coverage measures the percentage of code that is executed by automated tests. Higher coverage usually means more of the codebase is exercised by tests, although coverage alone does not show whether those tests check for the right behaviour.

- How code coverage is calculated: For line coverage, divide the number of executable lines run by the tests by the total number of executable lines, then multiply by 100 to get a percentage.

- What tools can be used to get code coverage data: Tools like JaCoCo (https://www.jacoco.org) and Cobertura (http://cobertura.github.io/cobertura/) are commonly used for code coverage analysis.

- What average, good, and best in class look like for code coverage:

  - Average: 60-70%

  - Good: 70-85%

  - Best in class: >85%
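
The line coverage calculation above can be sketched in Python as follows. It assumes you already have two sets of line numbers for a file: the lines that can execute and the lines your tests actually ran. In practice a tool such as JaCoCo collects this data through instrumentation; the function and inputs here are hypothetical.

```python
def line_coverage_percent(executable_lines: set[int], executed_lines: set[int]) -> float:
    """Percentage of executable lines that the test suite ran at least once."""
    if not executable_lines:
        raise ValueError("no executable lines to measure")
    # Only count executed lines that are also executable (ignore stray line numbers).
    covered = executable_lines & executed_lines
    return 100 * len(covered) / len(executable_lines)


# Hypothetical data: lines 1-10 are executable, the tests ran lines 1-8.
executable = set(range(1, 11))
executed = set(range(1, 9))
print(line_coverage_percent(executable, executed))  # 80.0
```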

3. Mean time to resolution (MTTR)

MTTR measures the average time taken to resolve bugs or issues. A lower MTTR indicates quicker resolution and less impact on users, contributing to higher software quality.

- How MTTR is calculated: Add up the time taken to resolve each issue, then divide the total by the number of issues resolved.

- What tools can be used to get MTTR data: Tools like Jira (https://www.atlassian.com/software/jira) and ServiceNow (https://www.servicenow.com) can help track issue resolution times.

- What average, good, and best in class look like for MTTR:

  - Average: Several days

  - Good: 1-2 days

  - Best in class: <24 hours
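
A minimal MTTR sketch, assuming each resolved issue is available as a pair of opened and resolved timestamps (for example, exported from Jira or ServiceNow; the export format here is hypothetical). Python's `timedelta` supports division by an integer, which keeps the arithmetic readable.

```python
from datetime import datetime, timedelta


def mean_time_to_resolution(issues: list[tuple[datetime, datetime]]) -> timedelta:
    """Average of (resolved - opened) across resolved issues."""
    if not issues:
        raise ValueError("need at least one resolved issue")
    # Sum the individual resolution times, starting from a zero timedelta.
    total = sum((resolved - opened for opened, resolved in issues), timedelta())
    return total / len(issues)


# Hypothetical issues as (opened, resolved) timestamps.
issues = [
    (datetime(2024, 5, 1, 9, 0), datetime(2024, 5, 1, 17, 0)),   # 8 hours
    (datetime(2024, 5, 2, 10, 0), datetime(2024, 5, 3, 10, 0)),  # 24 hours
    (datetime(2024, 5, 6, 8, 0), datetime(2024, 5, 6, 12, 0)),   # 4 hours
]
print(mean_time_to_resolution(issues))  # 12:00:00
```

The three example issues take 8, 24 and 4 hours, so the mean is 12 hours, which would fall in the "Best in class" band.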

4. Defect resolution rate

This metric measures the percentage of identified defects that are resolved over a specific time period. A high defect resolution rate indicates an effective quality assurance process.

- How defect resolution rate is calculated: For a given period, divide the number of resolved defects by the total number of reported defects, then multiply by 100 to get a percentage.

- What tools can be used to get defect resolution rate data: Tools like Bugzilla (https://www.bugzilla.org) and GitHub Issues (https://github.com/features/issues) are useful.

- What average, good, and best in class look like for defect resolution rate:

  - Average: 70-80%

  - Good: 80-90%

  - Best in class: >90%
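
The sketch below applies the resolution-rate formula to a list of defect records for one period. It makes one assumption worth stating: a defect counts toward the period if it was reported within it, and counts as resolved only if it was closed by the end of that period. The `Defect` class and sample data are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Defect:
    reported_on: date
    resolved_on: Optional[date] = None  # None while the defect is still open


def defect_resolution_rate(defects: list[Defect], start: date, end: date) -> float:
    """Percentage of defects reported in [start, end] that were resolved by `end`."""
    reported = [d for d in defects if start <= d.reported_on <= end]
    if not reported:
        raise ValueError("no defects reported in this period")
    resolved = [d for d in reported if d.resolved_on is not None and d.resolved_on <= end]
    return 100 * len(resolved) / len(reported)


# Hypothetical defects for May 2024.
defects = [
    Defect(date(2024, 5, 2), date(2024, 5, 3)),
    Defect(date(2024, 5, 10), date(2024, 5, 20)),
    Defect(date(2024, 5, 15)),                    # still open
    Defect(date(2024, 5, 28), date(2024, 5, 30)),
    Defect(date(2024, 4, 30), date(2024, 5, 1)),  # reported before May, excluded
]
print(defect_resolution_rate(defects, date(2024, 5, 1), date(2024, 5, 31)))  # 75.0
```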

5. Customer-reported defects

This metric counts the number of defects reported by customers after the product has been released. Fewer customer-reported defects indicate higher software quality and better user satisfaction.

- How customer-reported defects are calculated: Count the number of defects reported by customers over a set period, such as a month or a release cycle.

- What tools can be used to get customer-reported defects data: Helpdesk tools like Zendesk (https://www.zendesk.com) and Freshdesk (https://freshdesk.com) can track customer-reported issues.

- What average, good, and best in class look like for customer-reported defects:

  - Average: Varies widely based on application size and user base

  - Good: Lower than industry benchmarks

  - Best in class: Minimal to zero
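
Counting customer-reported defects is simple, but grouping the count by month makes trends easier to see. This sketch assumes a helpdesk export where each ticket has hypothetical `opened`, `type` and `source` fields; Zendesk and Freshdesk exports use their own schemas, so the field mapping would need adjusting.

```python
from collections import Counter
from datetime import date


def customer_defects_per_month(tickets: list[dict]) -> Counter:
    """Count defect tickets raised by customers, grouped by (year, month)."""
    return Counter(
        (t["opened"].year, t["opened"].month)
        for t in tickets
        if t["type"] == "defect" and t["source"] == "customer"
    )


# Hypothetical helpdesk export; the field names are assumptions.
tickets = [
    {"opened": date(2024, 5, 3), "type": "defect", "source": "customer"},
    {"opened": date(2024, 5, 9), "type": "question", "source": "customer"},
    {"opened": date(2024, 5, 20), "type": "defect", "source": "internal_qa"},
    {"opened": date(2024, 6, 1), "type": "defect", "source": "customer"},
    {"opened": date(2024, 6, 4), "type": "defect", "source": "customer"},
]
counts = customer_defects_per_month(tickets)
print(counts[(2024, 5)], counts[(2024, 6)])  # 1 2
```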

6. Change failure rate

Change failure rate measures the percentage of changes deployed to production that result in a failure requiring a rollback, hotfix, or other remediation. Lower rates indicate higher-quality code changes.

- How change failure rate is calculated: Divide the number of failed changes by the total number of changes, then multiply by 100 to get a percentage.

- What tools can be used to get change failure rate data: CI/CD tools like Jenkins (https://www.jenkins.io) and CircleCI (https://circleci.com) track change success and failure rates.

- What average, good, and best in class look like for change failure rate:

  - Average: 10-20%

  - Good: 5-10%

  - Best in class: <5%
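
Here is a minimal sketch of the change failure rate formula. The hard part in practice is deciding which changes failed; this example assumes each deployment record already carries a hypothetical `caused_failure` flag, set for instance by linking rollbacks and hotfixes back to the change that caused them.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    change_id: str
    caused_failure: bool  # True if the change needed a rollback or follow-up fix


def change_failure_rate(deployments: list[Deployment]) -> float:
    """Percentage of deployed changes that led to a failure."""
    if not deployments:
        raise ValueError("no deployments in this period")
    failed = sum(1 for d in deployments if d.caused_failure)
    return 100 * failed / len(deployments)


# Hypothetical period with 20 deployments, 2 of which failed.
deployments = [Deployment(f"chg-{i}", caused_failure=i in (4, 13)) for i in range(20)]
print(change_failure_rate(deployments))  # 10.0
```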

7. Release frequency

Release frequency measures how often new versions of the software are released. Higher release frequency, coupled with low defect rates, can indicate an agile and efficient development process with consistent quality.

- How release frequency is calculated: Count the number of releases within a specific time period.

- What tools can be used to get release frequency data: Continuous integration and deployment tools like GitLab (https://about.gitlab.com) and Bitbucket (https://bitbucket.org) offer insights into release frequency.

- What average, good, and best in class look like for release frequency:

  - Average: Monthly or every two months

  - Good: Every two weeks

  - Best in class: Weekly or daily
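
As a sketch, release frequency over a window can be expressed as an average number of releases per week, which makes windows of different lengths comparable. The release dates below are hypothetical; in practice they might come from tags or deployment records in GitLab or Bitbucket.

```python
from datetime import date


def releases_per_week(release_dates: list[date], start: date, end: date) -> float:
    """Average number of releases per week between start and end (inclusive)."""
    if end < start:
        raise ValueError("end must not be before start")
    in_window = [d for d in release_dates if start <= d <= end]
    weeks = ((end - start).days + 1) / 7  # inclusive day count converted to weeks
    return len(in_window) / weeks


# Hypothetical release dates, for example taken from release tags.
releases = [
    date(2024, 3, 29),  # before the window, ignored
    date(2024, 4, 2),
    date(2024, 4, 5),
    date(2024, 4, 11),
    date(2024, 4, 16),
    date(2024, 4, 19),
    date(2024, 4, 25),
]
print(releases_per_week(releases, date(2024, 4, 1), date(2024, 4, 28)))  # 1.5
```

At 1.5 releases per week, this hypothetical team would release more often than weekly.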

8. Escaped defects

Escaped defects are issues that were not detected during the testing phase and are discovered post-release. Fewer escaped defects suggest a robust testing process and higher overall software quality.

- How escaped defects are calculated: Count the number of defects found after release.

- What tools can be used to get escaped defects data: Tracking tools like Jira (https://www.atlassian.com/software/jira) and Azure DevOps (https://azure.microsoft.com/en-us/services/devops/) monitor defects across stages.

- What average, good, and best in class look like for escaped defects:

  - Average: Varies based on project complexity

  - Good: Significantly lower than industry averages

  - Best in class: Near zero
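
A minimal sketch of counting escaped defects per release, assuming each defect record notes the release it shipped in and the stage where it was found. The field names and stage labels are hypothetical, since Jira and Azure DevOps let you model stages in different ways; here, anything found in production counts as escaped.

```python
from collections import Counter


def escaped_defects_per_release(defects: list[dict]) -> Counter:
    """Count defects found in production, grouped by the release they shipped in."""
    return Counter(d["release"] for d in defects if d["found_in"] == "production")


# Hypothetical defect records; "found_in" is the stage where the defect was detected.
defects = [
    {"id": "BUG-1", "release": "2.3.0", "found_in": "qa"},
    {"id": "BUG-2", "release": "2.3.0", "found_in": "production"},
    {"id": "BUG-3", "release": "2.4.0", "found_in": "staging"},
    {"id": "BUG-4", "release": "2.4.0", "found_in": "production"},
    {"id": "BUG-5", "release": "2.4.0", "found_in": "production"},
]
counts = escaped_defects_per_release(defects)
print(counts["2.3.0"], counts["2.4.0"])  # 1 2
```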

9. Technical debt

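Technical debt is the extra work a team takes on later when it chooses quick or expedient solutions over better ones, for example skipped refactoring, duplicated code, or missing tests. Managing technical debt is essential to maintaining software quality over time.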

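- How technical debt is calculated: Several methods exist. A common approach estimates the effort needed to fix known code issues (the remediation cost) and compares it with the estimated effort needed to write the code, expressed as a technical debt ratio.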

- What tools can be used to get technical debt data: Tools like SonarQube (https://www.sonarqube.org) and CodeClimate (https://codeclimate.com) provide insights into technical debt.

- What average, good, and best in class look like for technical debt:

  - Average: Subject to project size and history

  - Good: Constantly monitored and reduced

  - Best in class: Minimal to zero debt
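
One common way to put a number on technical debt is a ratio of remediation cost to development cost, similar to the technical debt ratio SonarQube reports. In this sketch the 30-minutes-per-line development cost is an assumption (we believe it matches SonarQube's default, but check your own configuration), and the remediation estimate is a hypothetical input you would take from your analysis tool.

```python
def technical_debt_ratio(remediation_minutes: float, lines_of_code: int,
                         minutes_per_line: float = 30.0) -> float:
    """Remediation cost as a percentage of the estimated cost to write the code.

    minutes_per_line is an assumed development cost per line of code.
    """
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    development_minutes = lines_of_code * minutes_per_line
    return 100 * remediation_minutes / development_minutes


# Hypothetical: 150 hours of estimated fixes in a 10,000-line codebase.
print(technical_debt_ratio(150 * 60, 10_000))  # 3.0
```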

10. Test automation coverage

Test automation coverage measures the proportion of test cases that are automated. Higher automation coverage often correlates with faster, more reliable testing cycles.

- How test automation coverage is calculated: Divide the number of automated tests by the total number of tests, then multiply by 100 to get a percentage.

- What tools can be used to get test automation coverage data: Tools like Selenium (https://www.selenium.dev) and TestComplete (https://smartbear.com/product/testcomplete/overview/) are widely used to build the automated tests that this metric counts.

- What average, good, and best in class look like for test automation coverage:

  - Average: 30-50%

  - Good: 50-70%

  - Best in class: >70%
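
The formula above translates directly into code. This sketch assumes a test inventory where each test case has a hypothetical `automated` flag, as you might export from a test management tool.

```python
def automation_coverage_percent(test_cases: list[dict]) -> float:
    """Percentage of test cases marked as automated."""
    if not test_cases:
        raise ValueError("no test cases")
    automated = sum(1 for t in test_cases if t["automated"])
    return 100 * automated / len(test_cases)


# Hypothetical inventory of 80 test cases, 55 of them automated.
test_cases = [{"name": f"TC-{i}", "automated": i < 55} for i in range(80)]
print(automation_coverage_percent(test_cases))  # 68.75
```

At 68.75%, this hypothetical suite sits near the top of the "Good" band.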

How to track metrics for software quality

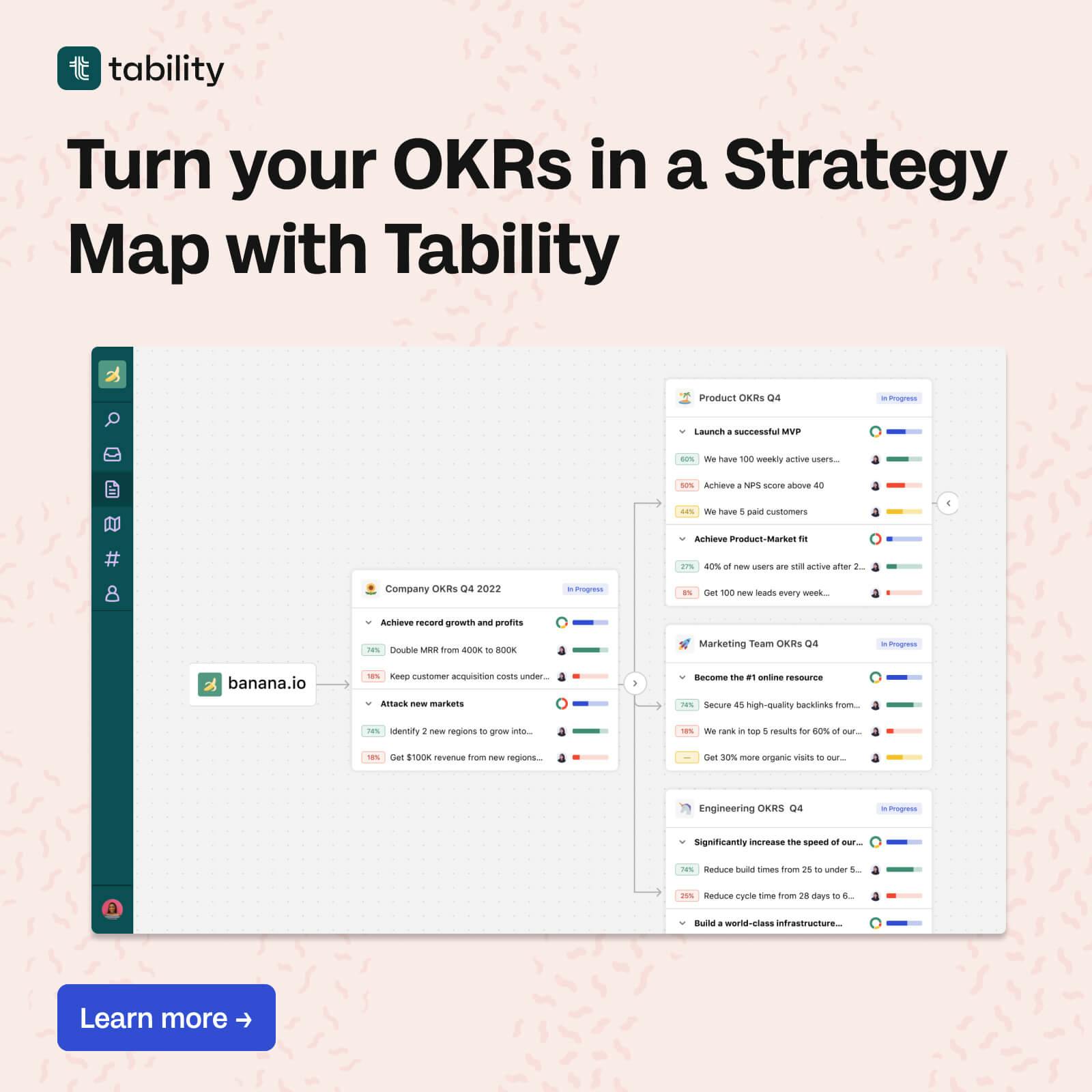

Tracking metrics effectively can be time-consuming and challenging without the right tools. To streamline the process, consider using a goal-tracking tool like Tability. Tability can help your team stay focused on the right metrics, providing a collaborative platform to track progress and make data-driven decisions to improve software quality. By having a clear view of your metrics, you can identify areas that need attention and act promptly to ensure consistent improvement.

FAQ

Q: How often should we review our software quality metrics?

A: It's generally good practice to review software quality metrics regularly, for example every week or every two weeks. More frequent reviews allow for quicker identification and resolution of issues, keeping the team on track with its quality goals.

Q: Can these metrics be used for any type of software development project?

A: Yes, these metrics can be applied across various types of software development projects, including web applications, mobile apps, and enterprise software. Although the specific targets for each metric may vary based on the nature and complexity of the project, the general principles of measuring and improving quality remain consistent.

Q: What should we do if one of our metrics is below the desired target?

A: If a metric is below the desired target, the team should investigate the underlying causes. This might involve conducting root cause analysis, examining current processes, or seeking feedback from team members. Once the issues are identified, implement corrective actions and monitor the results to ensure improvement.

Q: Can we prioritise certain metrics over others?

A: Absolutely. Depending on your project's specific goals and current challenges, you may choose to prioritise certain metrics over others. It's essential to focus on the metrics that align most closely with your project objectives and have the most significant impact on the overall software quality.